In the third part of the series, our adventure is slowly but surely heading to its climax. All ‘characters’ have been introduced. There’s DotNet Core, the new, misunderstood (or at least misunderstood by me?) kid with a bunch of talent. Docker, the awesome supporting character who actually deserves his own movie. Surely there’ll be a spin-off! There’s the antagonist, Jenkins, who might end up working with our main character more than either had hoped for. Then there’s the mid-movie surprise character, Microsoft SQL Server!

And then there’s me, the engineer coming from a Java EE background trying to piece it all together.

Let’s just hope this story doesn’t end up on Rotten Tomatoes…

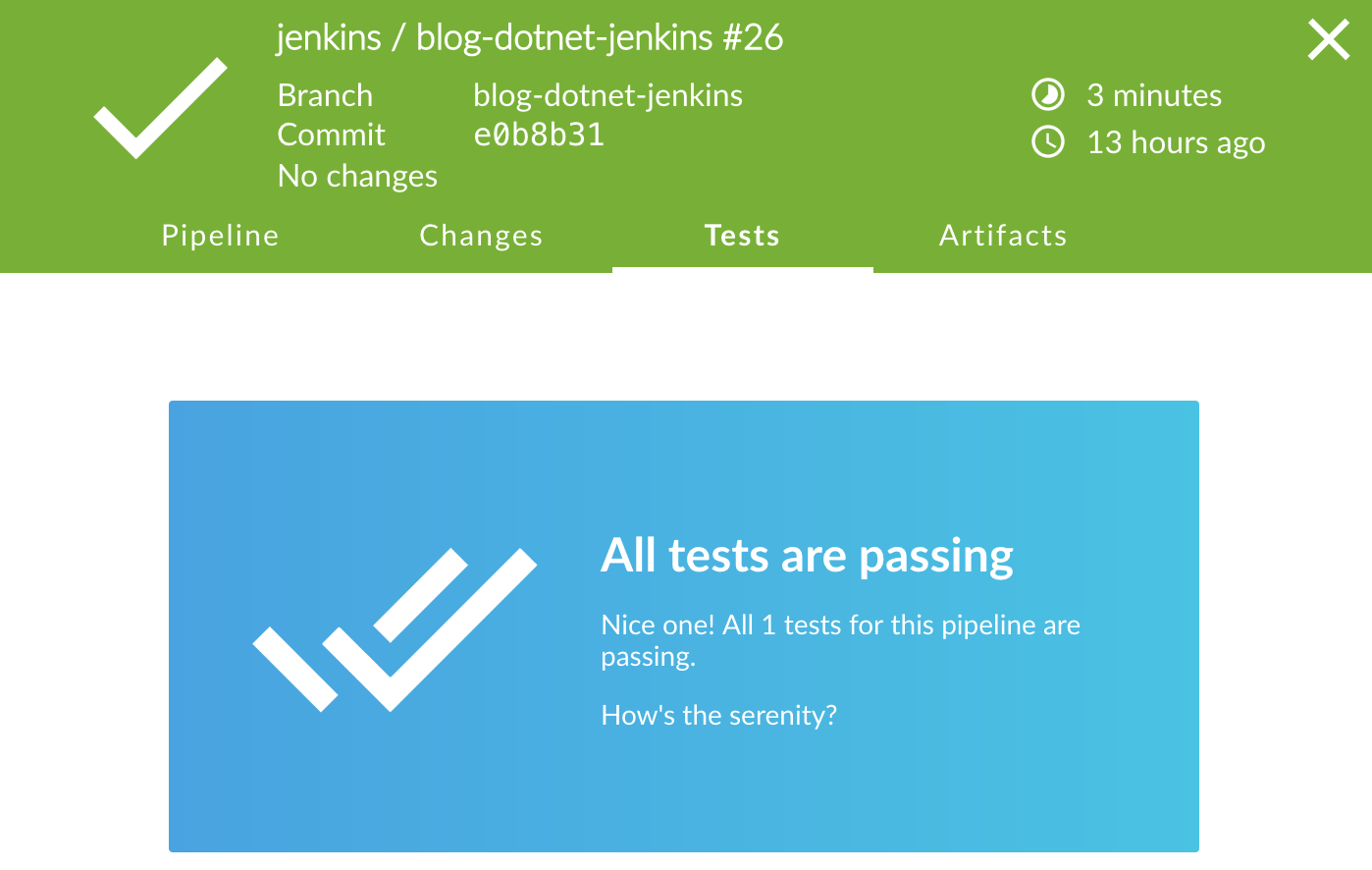

You can tell it's not actually doing much by the green color.

Challenge accepted

In the previous posts ([part I]({{<ref "dotnetcore-adventures-i.md">}}), [part II]({{<ref "dotnetcore-adventures-ii.md">}})) I have been building a dotnet core application. Not wanting to start from scratch, I adopted a simple TodoApi example from the (still excellent) ASP.NET Core tutorials. I wound up with a REST API to GET and POST some todo items. This was a good starting point, but I can’t seriously say that I now know dotnet, or have even written something remotely useful in it. The biggest thing missing, for me, was something that most ‘tutorial’ applications lack, and which every real-life application has:

Run time dependencies

I mean, sure, it has dependencies on ASP.NET Core. But when it comes to deploying and running, it’s a simple application. Very few real services are like that. Services, ironically, often require other services to run. A backend needs databases or message queues, a frontend needs a backend, and so on. Even if you go all fancy microservices in your architecture, chances are you’re still managing processes for databases or message queuing. I’m just going to assume you’re not writing your services all by yourself, from HTTP down to flat files. Anyway, inter-process dependencies like these are often the challenging factor in delivering software. So, let’s not shy away from a challenge.

For a todo REST API, the most logical thing would be to add persistence. Right now, if we restart our container, we lose all previously registered todo items. Not the most user-friendly experience. I’m out of metaphors to explain this, but when I think of persistence and Microsoft technology, I think of SQL Server. For a long while, it would not have been an option, since it used to run exclusively on Windows. But a few months back, a new SQL Server version was released that ran on Linux. Not only that, it ran in a Docker container too.

Excellent……cue evil laughter

Core makes everything cooler

So, persistence for a dotnet application. What framework to use? On the Java side of things, we basically have two ‘flavors’ of frameworks. There are Object Relational Mapping solutions; think Hibernate, if you will. If you’re more into writing pure SQL and mapping that to objects, you can use a query mapping framework; think something like MyBatis. There are probably a bunch more in both categories, but I’m not writing about Java now.

Looking at the (once again, excellent) dotnet and ASP.NET Core tutorials, one quickly stumbles upon something called EntityFrameworkCore. Now, I know I have criticized Microsoft’s naming strategy for frameworks before. Usually it’s just boring, summarizing what the framework does. Blergh. Same goes here, except… for some reason, I like EntityFrameworkCore. Probably because the ‘core’ bit makes it sound more… well… hardCore? If it had just been EntityFramework (which I believe it’s still called in the ‘vanilla’ .NET variant), I’d not be a fan.

Anyway, as you can guess by now, there’s a bunch of tutorials on EntityFrameworkCore. But, none of those actually ‘integrate’ into the Todo tutorial.

But, no worries; I can still program some myself, believe it or not.

So, first off, I can just use my plain old TodoItem class as an entity:

```csharp
public class TodoItem
{
    public string TodoItemID { get; set; }
    public string Name { get; set; }
    public bool IsComplete { get; set; }
}
```

The idea, as far as I can tell, is that you create your own ‘context’ class that extends DbContext. So, I made a TodoContext, with the idea that it’ll manage all entities for my todo API. In the TodoContext class, I register the entity TodoItem and state which table it is to be saved in. I give the TodoContext class a public property `DbSet<TodoItem> TodoItems`, and all should be good to go for my business methods:

```csharp
using Microsoft.EntityFrameworkCore;

public class TodoContext : DbContext
{
    public TodoContext(DbContextOptions<TodoContext> options) : base(options)
    {
    }

    protected override void OnModelCreating(ModelBuilder modelBuilder)
    {
        // Map the TodoItem entity to a table called "TodoItem"
        modelBuilder.Entity<TodoItem>().ToTable("TodoItem");
    }

    public DbSet<TodoItem> TodoItems { get; set; }
}
```

And yes, behold, my ‘business’ logic can now use the TodoContext to do some persistence stuff:

```csharp
_dbContext.Update(item);
_dbContext.SaveChanges();
```

Yes, there needs to be a call to `.SaveChanges()`, which, for me as a Java programmer coming from a Java EE world, is a bit silly. As in, I honestly forgot to do it on my first go. Why isn’t there a container to manage this transactional stuff? But then, when I’m completely honest with myself, the answer already lies in that question. There is no container, it’s not Java EE, and doing something like this is not all that weird even in other languages.

I know, I know, but it’s so much easier to hate than it is to tolerate, right?

Holding the line

Of course, doing all this work still did not connect it to any SQL server instance, make a database or tables, or actually even run at all. There’s still initialization and setup to do!

So, in our Startup class, in the ConfigureServices method where all injectable services are configured, we add a simple line:

```csharp
services.AddDbContext<TodoContext>(options =>
    options.UseSqlServer(Configuration.GetConnectionString("DefaultConnection")));
```

And, as you can imagine, we add a connection string called ‘DefaultConnection’ to the appsettings.json file, under the ConnectionStrings section that GetConnectionString reads from:

```json
{
  "ConnectionStrings": {
    "DefaultConnection": "Data Source=localhost,1433;Initial Catalog=TodoDev;Integrated Security=False;User ID=sa;Password=xihdG!8uNCavA!Rw"
  }
}
```

I’m sure it’s a terrible connection string. But it works. Yes, you know the password to my SQL Server instance… Or do you?

That all seems to work fine, assuming the database exists. And here we get into a pickle, because I do not want to depend on that. I want EntityFrameworkCore to create it for me. Not just the tables, but the whole database. Luckily, the tutorials help me out there, and tell me how to set up an initializer class, with even some code to populate it with some data. Nice.

Just create a DbInitializer class, and make sure to call its method in the Startup class’s Configure method. Let’s copy and paste… wait a minute… is that a static method in that initializer example?

Now, there are lines to be held. The URL you are currently browsing is staticsmustdie.net; I cannot go and claim we need a static method for something as silly as database initialization. It’s not necessary, it’s lazy, and it must be stopped. So, as that Rage Against the Machine song goes… yeah… I won’t do what you tell me.

So what do I do? Simple enough, drop the static, inject a new instance. Create testable code. Boom.

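```csharp
public class DbInitializer
{
    public void Initialize(TodoContext context)
    {
        // Creates the database and its schema if the database doesn't exist yet;
        // does nothing if it already exists
        context.Database.EnsureCreated();
    }
}

// In the Startup class:
public void ConfigureServices(...)
{
    // other stuff going on as well
    services.AddSingleton<DbInitializer, DbInitializer>();
}

public void Configure(...)
{
    // other stuff going on as well
    // dbInitializer and dbContext come in as parameters of Configure,
    // resolved by ASP.NET Core's dependency injection
    dbInitializer.Initialize(dbContext);
}
```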

This leaves me with a nice DbInitializer class, where I can add some dummy data if I want to as well. But honestly, if that were all the code in a DbInitializer in a ‘real’ project, I would probably just inline the `context.Database.EnsureCreated()` call in the Startup’s Configure method.

But I wanted to make a statement. Against statics.

Composing. Like Mozart. But less classical

So, our service is coming along nicely. Using a local SQL Server (thanks to Docker!), this all just works. And I have to hand it to the ASP.NET Core guys here. That’s pretty awesome. Back in the day, I had a harder time getting started with any JPA framework or configuration. But that might have been me as well. Or the overwhelming number of frameworks and choices. Oh well.

Anyway, this all has no value unless it is delivered to production, right? So, how do we ensure that there’s a SQL Server instance running in production, and that the TodoApi service can connect to it? Well, let’s keep it simple enough for now and use Docker Compose for that. Docker Compose is a tool that allows you to put container configuration (mind you, container, not image) in a docker-compose.yml file. You can put in multiple containers, configure them, and then use something as simple as `docker-compose up` to ensure all containers are running.

So, how does that look for our TodoApi?

```yaml
version: "2"

services:
  mssql:
    image: microsoft/mssql-server-linux
    environment:
      ACCEPT_EULA: "Y"
      SA_PASSWORD: "xihdG!8uNCavA!Rw"
    ports:
      - 1433:1433
    networks:
      - production

  todo-api:
    image: corstijank/blog-dotnet-jenkins:2.0-59
    ports:
      - 5000:5000
    networks:
      - production
    depends_on:
      - mssql

networks:
  production:
```

I like to think this is all pretty self-explanatory, but still, I’m going to give it a quick round-up:

- `version: "2"`: just which version of the docker-compose syntax we’re using. I believe ‘3’ or ‘3.1’ is out, but I haven’t gotten around to looking into that yet.
- `services`: here are the good bits. This declares which services need to be running according to this compose file. Each service translates into one or more running containers.
  - `mssql` is our SQL Server service. It’s based on a Docker image, as you can see, has some environment variables (uh oh… there’s my password again… OR IS IT?), and describes which ports to publish. I’ll get to the networking in a bit.
  - `todo-api` is the actual dotnet service. It publishes on port 5000 and depends on `mssql`. That at least ensures that `mssql` is started first. (Side note: this does not necessarily mean todo-api gets started after SQL Server has finished starting. There’s no check for that in here, for now.)
- `networks`: I explicitly declare a ‘docker network’ that the services are going to attach to. Among other things, this ensures that services on the same network can find each other by simple DNS, using the other service’s name. So the hostname for the SQL Server service, from the todo-api container, is simply `mssql`, as they are both on the same Docker network.
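Because `depends_on` only controls start order, a common workaround is to let the todo-api container wait for SQL Server’s port before starting the app. Below is a minimal sketch, assuming the container image has Bash (it uses Bash’s `/dev/tcp` redirection) and that you would change the image’s entrypoint to call it. `wait_for_port`, the timeout values and `TodoApi.dll` are illustrative names, not part of the setup above. An open port only means SQL Server accepts TCP connections, which is a reasonable but not perfect readiness signal.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until HOST:PORT accepts TCP connections,
# giving up after TIMEOUT seconds (default 30). Relies on Bash's /dev/tcp.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-30}"
  local elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connect;
    # the descriptor is closed again when the subshell exits.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 1
}

# Hypothetical entrypoint usage inside the todo-api container
# ("mssql" resolves through the compose network):
# wait_for_port mssql 1433 60 && exec dotnet TodoApi.dll
```

Failing after a timeout, rather than looping forever, lets Docker (or you) notice that SQL Server never came up.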

Now it gets interesting. Remember that dreaded appsettings.json? The connection string clearly had SQL Server running on localhost. But if we run these containers, then from the perspective of the todo-api container, SQL Server is not running on localhost, but rather on the hostname `mssql`.

So, there is a need for another JSON file. I called it appsettings.docker.json. In this file, I switched out localhost for mssql. I made sure to edit the project.json file to include it when publishing the app, so it’ll get picked up into our container. Nice.

Now, I know pretty much all documentation and examples say to call the JSON file something like appsettings.production.json, or after whichever environment it is for. But for building a Docker container, I am against those kinds of naming conventions. They imply something that might not be true. The settings file is going into the container, and the container does not know whether it is running in production, staging or whatever. So, I prefer to stick to a simple name that says where the file is going: appsettings.docker.json.
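If you’d rather not keep two nearly identical settings files in sync by hand, appsettings.docker.json could also be derived from appsettings.json during the build with a one-line sed substitution. A minimal sketch, assuming the `localhost` host in the connection string is the only difference between the two files; `make_docker_settings` is a hypothetical name.

```shell
# Hypothetical build step: derive the Docker settings file from appsettings.json
# by pointing the connection string at the compose service name "mssql".
make_docker_settings() {
  local src="$1" dest="$2"
  # Rewrites the first "Data Source=localhost," on each line; other uses of
  # "localhost" elsewhere in the file are left untouched.
  sed -e 's#Data Source=localhost,#Data Source=mssql,#' "$src" > "$dest"
}

# Usage:
# make_docker_settings appsettings.json appsettings.docker.json
```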

Final steps

Finally, we need to make sure this is all executed in the pipeline when deploying to production. The tricky point here is the todo-api image name in the compose file: it points to a specific build number. So, when deploying, we need to:

- Automatically edit the compose file and switch in the new image label

- Run docker compose

- Create a git commit for our edited compose file and push it

That sounds complicated, but it’s actually not that bad:

```groovy
stage('Run in production'){
    agent { label 'hasDocker' }
    steps{
        dir('Environments/Production'){
            sshagent(['corstijank-ssh']){
                // "-i -e", not "-ie": GNU sed reads "-ie" as -i with backup suffix "e"
                sh """
                    sed -i -e 's#corstijank/blog-dotnet-jenkins:.*#${IMAGETAG_VERSIONED}#g' docker-compose.yml
                    docker-compose up -d
                    git config user.email "jenkins@staticsmustdie.net"
                    git config user.name "Jenkins"
                    git checkout master
                    git commit -am "updated to ${IMAGETAG_VERSIONED}"
                    git push
                """
            }
        }
    }
}
```

Probably the scariest part there is the sed command. Yes, that is the UNIX Stream EDitor. If you’re on macOS or Linux, ‘man’ it. It’s awesome. I’m sure there’s something equivalent on Windows that lets you do this in a one-liner. Or rather, I hope so. If not, there’s always Bash on Windows 10.

So a quick explanation of the sed line:

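```
sed -i -e 's#corstijank/blog-dotnet-jenkins:.*#${IMAGETAG_VERSIONED}#g' docker-compose.yml
```

- `-i`: edit the file in place. It is written as `-i -e` rather than `-ie`, because GNU sed reads `-ie` as `-i` with the backup suffix `e`, which leaves a stray `docker-compose.ymle` behind.
- `s#…#…#g`: substitute. Using `#` instead of the usual `/` as the delimiter means the `/` in the image name doesn’t need escaping.
- `corstijank/blog-dotnet-jenkins:.*`: a string matching this regex, i.e. the image name plus whatever tag follows it.
- `${IMAGETAG_VERSIONED}`: with this string, the new versioned image name.
- `g`: all matches.
- `docker-compose.yml`: in this file.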
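To try the substitution out without touching a real deployment, you can run the same expression against a throwaway compose file. A small sketch; `bump_image_tag` is a made-up name, and instead of `-i` it writes to a temporary file and moves it back, so it behaves the same with GNU and BSD (macOS) sed.

```shell
# Hypothetical helper mirroring the pipeline's sed call: point the todo-api
# image in a compose file at a new versioned image name.
bump_image_tag() {
  local file="$1" new_image="$2"
  # '#' is the s-command delimiter, so the '/' in the image name needs no escaping.
  sed -e "s#corstijank/blog-dotnet-jenkins:.*#${new_image}#g" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Usage:
# bump_image_tag docker-compose.yml corstijank/blog-dotnet-jenkins:2.0-60
```

Only the line with the todo-api image changes; the `mssql` image line doesn’t match the regex and stays as it is.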

The rest are basic git commands. I know, I could probably configure user email and name outside or globally. On the other hand, do I really want to depend on that? I don’t think this is too bad. Same goes for the checkout master command. It just ensures we’re working on the master branch (or whichever branch you want to commit on). You could assume you are in some state that might already be there, but why not make sure?

Other side note: If you keep the compose file in the same git repository as the code, doing this might trigger another build if you’re using a git hook. Some simple alternative solutions to circumvent this:

- Use a smarter git hook that excludes pushes from Jenkins

- Push to a separate branch that doesn’t start a build

- Push all infrastructure configuration to a separate git repository, separating infrastructure and business code.
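For the first option, the check itself can be small. A simplified sketch, assuming the hook only looks at the latest commit and recognizes the pipeline’s commits by the author name and message prefix it sets (`Jenkins`, `updated to …`); `is_deploy_commit` and the hook wiring in the comment are hypothetical.

```shell
# Hypothetical check for a "smarter" git hook: succeeds (returns 0) when a
# commit looks like the pipeline's own deployment commit, so no build is needed.
is_deploy_commit() {
  local author="$1" subject="$2"
  [ "$author" = "Jenkins" ] || return 1
  case "$subject" in
    "updated to "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Possible use in a hook, based on the latest commit's author name and subject:
# if is_deploy_commit "$(git log -1 --format=%an)" "$(git log -1 --format=%s)"; then
#   exit 0  # skip triggering a build
# fi
```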

I’m not going to state that one is better than the other. Sorry, it’s just a choice, and one that could warrant a whole separate blog post.

You could also do without the commit, but then you lose some pretty nice auditing.

Finally, note I am using git over SSH. It’s just a bit easier to configure in Jenkins using the SSHAgent as in the example. There are workarounds when using http(s), but they basically all include substituting username and password in the url, which is just…well…I don’t like it.

All’s well that ends well

Well, it’s been a long run, but here we go:

And there you have it. A working delivery pipeline, including Microsoft’s own SQL server. Complete with database initialization, continuous delivery, you can even see in git log which version got deployed to production when.

Also, you have managed to read through this ridiculously long blog post. It was too long in the making. Mostly due to circumstances such as vacation and the common cold, but also due to the fact that it’s not a small change to achieve. I could have split the topic into two posts, but then I’d have had to leave one blog post with a pipeline that either wasn’t working or wasn’t representative of the way the code worked. Either was a no-no for me.

But, I’m glad you stuck with me to the end ;-)

Until the next time!