In my previous post on this topic, I somehow got the jitters to get started with .NET. Or more specifically, .NET Core. Being as stubborn as I am, I created a simple delivery pipeline in Jenkins for building and deploying a .NET Core Docker container.

Now, this might sound like hell freezing over. Me, programming .NET? Blasphemy! Except for one little thing. For my previous post, I didn’t actually write one line of C# code. Now, I don’t want to give off the impression I’m uncomfortable in any programming language, and so I made sure to end the last blog post with some unfulfilled promises of creating a more serious project from this ‘Hello world’ example.

Time to pay up.

Previously..... on StaticsMustDie

The freedom of choice

So, I spent some time fiddling around to see how C# services work. To create any kind of web service, there is the ASP.NET Core framework. ASP.NET Core and the base .NET Core runtime seem kind of coupled in a non-technical way. Of course, nowadays, you’d be hard pressed not to write some sort of web application. And so far, to my knowledge, ASP.NET is the way to go for all the .NET folks.

Still, the distinction between .NET Core and ASP.NET Core might allow for some possible alternatives in the future? Or, maybe not. It’s kind of like how, back in the day, Java EE (back then still J2EE 1.2, 1.3) had the same kind of monopoly on the JVM when it came to developing back-end software (we didn’t do any of your fancy ‘web services’ back then). At one point, Spring came along and provided a (more pragmatic, in this case) alternative. Competition grew, and now, Java developers have a bunch of choices to make on what they use. But, ultimately, the choice kind of boils down to some stylistic differences, about which people love to get all religious. Yay for choice, right?

Anyway, I digress. Back to the matter at hand.

To Code, or not to Code

So, the choice for ASP.NET Core was simple. Now, was I going to write a small service myself? I had originally planned to, but then life got in the way. The whole household came down with the flu, and suddenly I was feeling kind of pressed for time. If I’m going to do this blog thing, I want to have at least some regularity to it, you know?

The thing is, writing my own service…was it really that important? I could follow a tutorial like anybody else. If I were to blog on that, I would just reproduce the tutorial, and that would not be up to the standards I want to hold myself to. I want to focus on the interesting points, Docker, Jenkins, unit testing, acceptance testing.

So, instead of writing about how to implement REST on C#/.NET Core, I’ll be starting with some simple tutorial code here. The ASP.NET Core documentation site has some excellent tutorials, and I really recommend you check them out. The code I’m starting with is the code explained in the ASP.NET Core tutorial on creating a simple REST service.

Lessons learned

So, I had to refactor some stuff, ‘git mv’ some directories around, and basically wound up with something which was starting to look like a real project folder:

    /global.json
    /TodoApi/Jenkinsfile
    /TodoApi/Dockerfile
    /TodoApi/project.json
    /TodoApi/**Code**

Our Dockerfile is clean and simple, and I’m not going to bother going into detail on it here. All I had to do was edit the Jenkinsfile (read the previous post to get up to speed on that) to make sure any running version of the container would be stopped before the deploy. This was not necessary before, as the previous iteration of our container was, well... just printing hello world.
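For what it’s worth, stopping a previous container before a deploy can be sketched in a few lines of shell. This is an assumption about the approach, not a copy of what is in the Jenkinsfile: the container name todoapi and the helper stop_if_running are made up for illustration, and the second argument only exists so the function can be tried with a stub instead of the real docker binary.

```shell
#!/bin/sh
# Hypothetical helper: stop and remove a container by name.
# Errors are ignored on purpose, so a first deploy (no container yet) still succeeds.
stop_if_running() {
    name="$1"
    docker_bin="${2:-docker}"   # assumption: lets you pass a stub for a dry run
    "$docker_bin" stop "$name" >/dev/null 2>&1 || true
    "$docker_bin" rm "$name" >/dev/null 2>&1 || true
}

# Dry run with `true` standing in for docker; drop the second argument in a real pipeline.
stop_if_running todoapi true
echo "old container gone, safe to deploy"
```

Swallowing the errors keeps the step idempotent: it behaves the same whether or not an old container exists.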

So, all should be well. Fire up the docker container. Query the service. Bask in .NET glory.

Nope. I ran into a few things that are well worth noting here.

Inversion of Control. Just on a different level

As I said, it was all relatively simple to start. The container ran, but when I tried to do a simple GET, it failed. Why? I got a simple Empty Response as the body text of my response. Why? Well, there we have to look a bit deeper into the C# code, what it does, and how that holds up in our delivery choices.

So, what does the code that starts an HTTP service look like?

    var host = new WebHostBuilder()
        .UseKestrel()
        .UseContentRoot(Directory.GetCurrentDirectory())
        .UseIISIntegration()
        .UseStartup<Startup>()
        .Build();

    host.Run();

My first thought seeing this was ‘Oh look, a simple builder that starts some kind of service. Nice. Just like we do it in Java in every (micro) service framework that is not JEE.’ They call it ‘Kestrel’? Well, that is a surprisingly non-utilitarian name for a Microsoft coined term. Shouldn’t it be called “InProcessIISAlternative”? Possibly with IIS spelled out?

</sarcasm>

So… going on… Use the current directory as content root. Sure, integrate with IIS if you find it (tip: you won’t). Use an instance of Startup as the startup object. All understandable. So why isn’t the bloody thing working?!

Well, the problem is not in the code that’s there. The problem is in the code that is not there. By default, a service started like this only listens on localhost:5000. That seems logical enough, right? It should work, right? Well yes, if you’re accessing it from localhost.

But, in our case, we are not. We are using Docker. Which means the service is running in a container. The container, from the perspective of our C# service, is localhost. That means that anything accessing the container, almost by definition, will not be coming from localhost, but rather as something more remote from our application’s perspective.

So, how to fix this? Well if you google around, you’re going to find a whole lot of information on configuration files you can edit. Maybe even for various stages like production or testing. I honestly didn’t really read into it that much. Not because I don’t care, but rather;

Application configuration files for network settings make no sense when you’re developing in containers. Whatever you configure in the configuration file, you can, and will, override it when you deploy the container. For example, you could specify it with -p, or have Docker decide for you, or just ignore the port altogether. Point is, the port that is going to be used to access your service is not decided by your service. So don’t add another configuration file. Just hardcode it. You won’t be running any other services inside your container anyway, so port collisions are not an issue.
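To make that concrete, here is a small sketch of who decides the port. The image name todoapi and the helper run_command are hypothetical; the function only prints the docker run command lines rather than executing them, so it can be run without Docker installed.

```shell
#!/bin/sh
# Hypothetical image name; the container side is always 5000,
# the port the service listens on inside the container.
IMAGE="todoapi"
CONTAINER_PORT=5000

# Print the `docker run` command that publishes the service on a given host port.
run_command() {
    host_port="$1"
    echo "docker run -d -p ${host_port}:${CONTAINER_PORT} ${IMAGE}"
}

# Same image, different host ports, no change to the application.
run_command 8080
run_command 80

# Or let Docker pick a free host port for every port the image EXPOSEs.
echo "docker run -d -P ${IMAGE}"
```

The only number the application ever sees is 5000. Everything to the left of the colon belongs to whoever deploys the container.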

And hardcode I did;

    var host = new WebHostBuilder()
        .UseKestrel()
        .UseUrls("http://0.0.0.0:5000/")   // listen on all interfaces, not just localhost
        .UseContentRoot(Directory.GetCurrentDirectory())
        .UseStartup<Startup>()
        .Build();

    host.Run();

Note I also deleted the IIS integration. I’ll have none of your shenanigans, IIS.

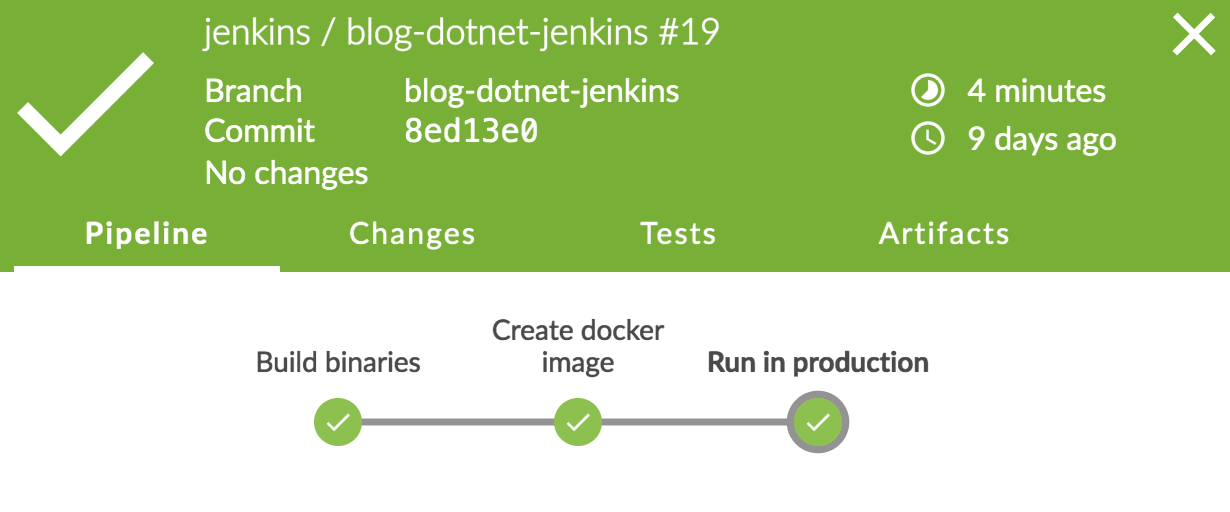

After this, we end up with a runnable container;

Crash test dummy

So, I have a service running, a delivery pipeline from the previous blog post, and actual value we can deliver to our shell using clients. At this point, even I am considering it might be smart to start adding some tests. The common practice for .NET projects seems to be to put the tests in a separate ‘project’ if you will. So, create an empty directory, run dotnet new -t xunittest and Bob’s your uncle.

From here, it’s time to implement the unit test. Again, I’m not going to go into that much here. Suffice to say I managed to create something. If you’re interested in the unit test capabilities of (ASP).NET Core, there are some really excellent tutorials here - what are you even doing still reading this?

Oh right. The Jenkins pipeline. So, as you might know (if you read the tutorials!) the command to run tests is a simple dotnet test. If you want to capture the results in a file, you should add some parameters to the dotnet call. Still, it should be easy enough to integrate into our pipeline.

Also, since these are unit tests, I want to make sure they are run before any building of Docker images or anything else. Here’s the code in the Jenkinsfile for the (renewed!) building stage:

    stage('Build binaries'){
        // Run this stage in a docker container with the dotnet sdk
        agent { docker 'microsoft/dotnet:latest'}
        steps{
            git url: 'https://github.com/corstijank/blog-dotnet-jenkins.git'
            sh 'cd TodoApi && dotnet restore'
            sh 'cd TodoApi.Test && dotnet restore'
            sh 'cd TodoApi.Test && dotnet test -xml xunit-results.xml'
            sh 'cd TodoApi && dotnet publish project.json -c Release -r ubuntu.14.04-x64 -o ./publish'
            stash includes: 'TodoApi/publish/**', name: 'prod_bins'
        }
        post{
            always{
                step([$class : 'XUnitBuilder',
                      thresholds: [[$class: 'FailedThreshold', failedThreshold: '1']],
                      tools : [[$class: 'XUnitDotNetTestType', pattern: '**/xunit-results.xml']]])
            }
        }
    }

There are basically two things that are important here. First off, and simplest, is the ‘dotnet test’ directive. The -xml option writes the results to an XML file, which we are going to use in recording the results.

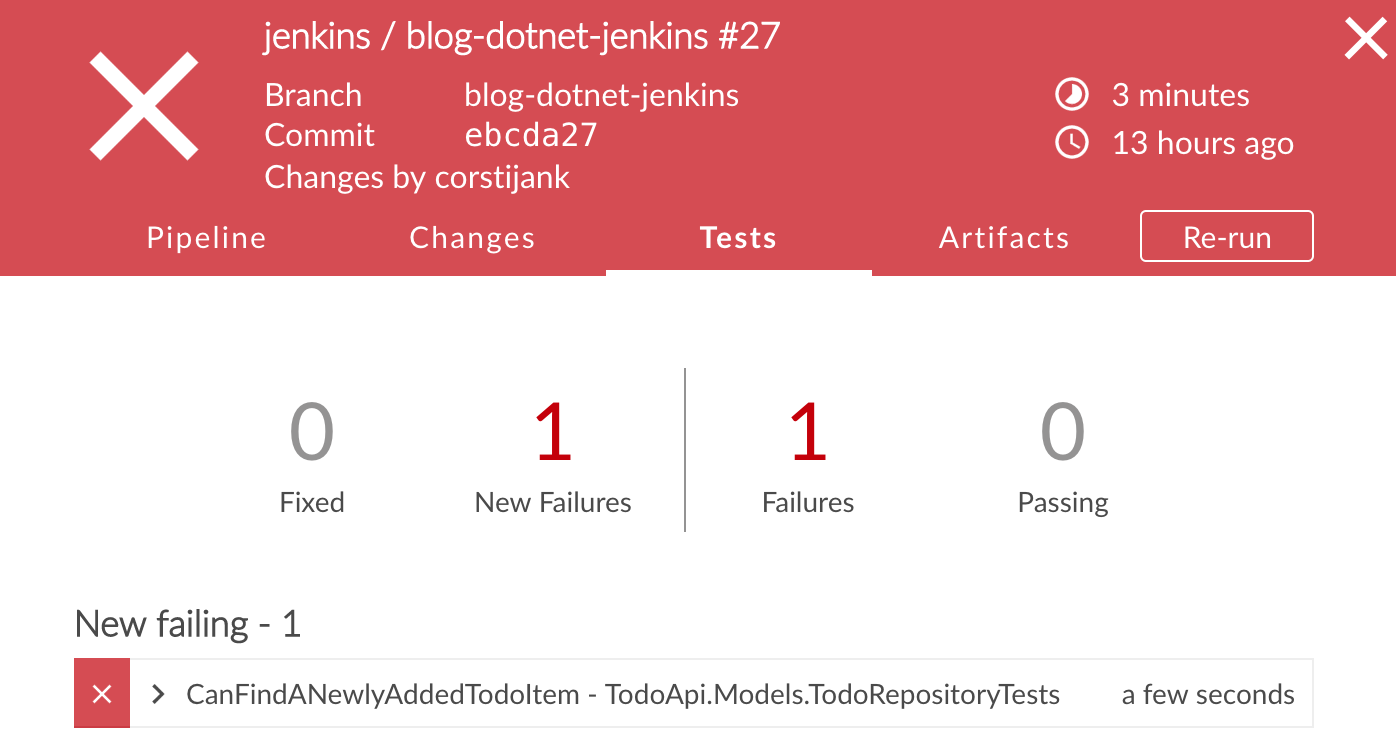

In a Jenkinsfile, each stage needs at least some steps, obviously. But, we can also add a post block. In this block, we can nest an always block. Here, we can record our results using the XUnit plugin.

When you record test results in a Jenkinsfile, be sure to always put the recording in something like a post/always block, or if you’re using pure script, use a try/finally construction. If your tests fail, the exit code will result in an exception. If you put the recording of your test results as a simple sequential step after the testing, it would mean execution would never reach the recording!
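The same rule holds outside Jenkins. As a rough analogy in plain shell (not Jenkins syntax, and the helper name run_then_record is made up), the trick is to remember the exit code of the test run, do the recording regardless, and only then pass the failure on. The exit 3 and echo commands are stand-ins for the real test and recording steps.

```shell
#!/bin/sh
# Run a test command, always run the recording command, then return the test's exit code.
run_then_record() {
    test_cmd="$1"
    record_cmd="$2"
    status=0
    sh -c "$test_cmd" || status=$?   # remember the failure instead of aborting here
    sh -c "$record_cmd"              # always reached, like a finally block
    return "$status"
}

# Stand-ins: `exit 3` plays a failing test run, `echo` plays the result recording.
if run_then_record "exit 3" "echo recording results"; then
    echo "tests passed"
else
    echo "tests failed, but results were recorded"
fi
```

The build still fails when the tests fail. The difference is that by then the results are already on record.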

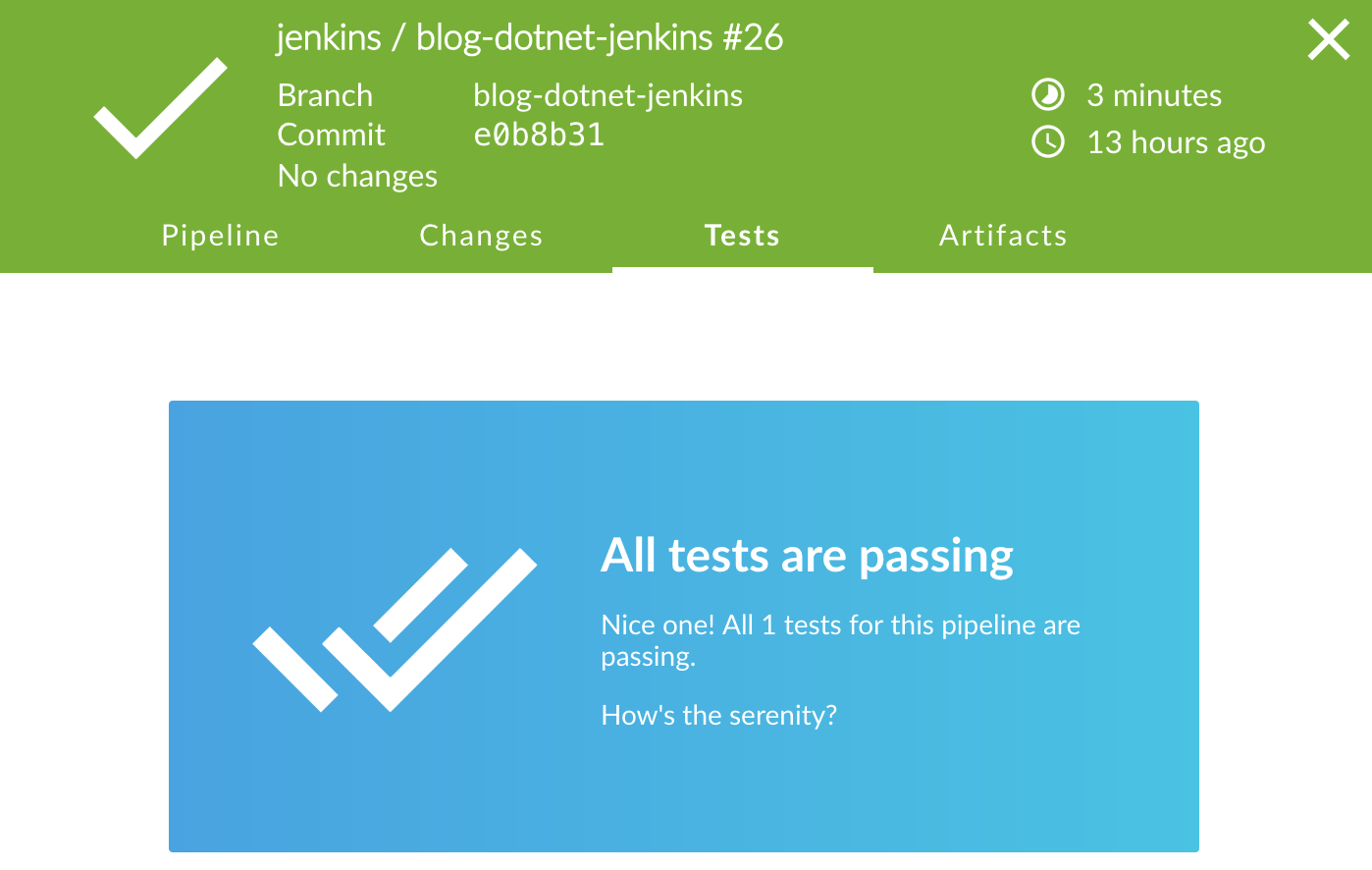

The serenity was not at a level I had hoped it to be

Everything going as expected...

And… that actually concludes integrating simple unit testing. It really was that easy.

At first it might look like the amount of low-level dotnet calls in our building stage might warrant some other, more abstract build tool to manage them. But so far, I really don’t mind it. Having to, for example, fix the ordering between my source and test projects doesn’t seem like a big deal at all if you keep things small. For bigger things, well, NuGet might help you with some dependency management there.

There are probably a whole bunch of cases where the ‘low-level’ attitude of the dotnet cli is going to fall short. I’m no experienced .NET developer. And I’m sure either Visual Studio or Team Foundation Server is going to offer some nice sugar on top, if they are not already. I don’t know.

I’m just using VSCode and Jenkins.

And I don’t feel much need for anything else yet.

Wrap-up

So, that concludes the second part of what looks like it is going to be a three-part adventure. The Jenkins pipeline we explored in the [previous part]({{<ref "dotnetcore-adventures-i.md">}}) was a fun introduction, but it was mostly dealing with Jenkins. This time around, we got into some interesting lessons on using ASP.NET Core with Docker, and what it would mean for configuration management.

Also, it was very nice to see how relatively easy it is to integrate test results from the cli into Jenkins. I’ll admit that using the step method is still a bit rough. I would rather use something like xunit **/xunit-results.xml -t XUnitDotNetTest or something. But I’m sure the authors of the Jenkins plugin are going to get on that sometime.

For the next post I plan on getting more creative; we have all the groundwork laid out now. Let’s see what we can do with acceptance testing, docker-compose, and our Jenkins pipeline, to spin up and tear down a set of containers and some virtual networking as our acceptance testing environment. That’s when things start to look really interesting, I think.

Again, if you’re curious about the full sources, or just want to peek at the complete picture, you can check out the repository on GitHub.